ConcurrentHashMap Interview Questions In Java.

Imagine a scenario where we have frequent reads(get) and less writes(put) and need thread safety,

Can we use Hashtable in this scenario?

No. Hashtable is thread safe but give poor performance in case of multiple thread reading from hashtable because all methods of Hashtable including get() method is synchronized and due to which invokation to any method has to wait until any other thread working on hashtable complete its operation(get, put etc).

Can we use HashMap in this scenario?

No. Hashmap will solve performance issue by giving parallel access to multiple threads reading hashmap simultaneously.

But Hashmap is not thread safe, so what will happen if one thread tries to put data and requires Rehashing and at same time other thread tries to read data from hashmap, It will go in infinite loop.

Infinite loop problem discussed in detail: Infinite loop in HashMap

ConcurrentHashMap combines good features of hashmap and hashtable and solves performance and thread safety problem nicely.

How HashMap works

Below diagram shows how hashtable/hashmap look like,

ConcurrentHashMap added one Array on top of it and each index of this additional array represents complete HashMap. Additional array is called Segment in ConcurrentHashMap.

Architecture of ConcurrentHashMap looks like below,

Putting key-value pair:

1. Putting key-value pair in ConcurrentHashMap requires first identifying exact index in

Segment array.

(Once Segment array index is identified, Now flow will be exactly same as putting the data in

hashmap/hashtable.)

2. After identifying index in Segment array, next task is to identify index of internal bucket/array

present in internal hashmap as shown in figure above.

3. After identying bucket(internal array index), iterate key-value pairs and check each key with key

to store, wherever match is found replace stored value with value to store.

If there is no match, store key-value pair at the last of list.

Getting key-value pair:

1. Getting key-value pair in ConcurrentHashMap requires first identifying exact index in

Segment array.

(Once Segment array index is identified, Now flow will be exactly same as getting the data from

hashmap/hashtable.)

2. After identifying index in Segment array, next task is to identify index of internal bucket/array

present in internal hashmap as shown in figure above.

3. After identying bucket(internal array index), iterate key-value pairs and match each key with

given key, wherever match is found return value stored against key.

If there is no match, return null.

Hashtable is not efficient beacause it uses map wide lock, it means lock is applied on map object itself,

So if 2 threads tries to call hashtable.get(key),

Thread T1 calls to get() method will acquire a lock on hashtable object and then execute get() method. (Lock is on complete 'hashtable object')

Now if Thread T2 calls hashtable.get(key) method, then it will also try to acquire lock on hashtable object, but T2 will not able to acquire lock as lock on 'hashtable' is currently held by T1, So T2 waits until T1 finishes get() operation and release lock on hashtable object.

ConcurrentHashMap works bit different here and instead of locking complete map object it Locks per Segment.

It means instead of single map wide lock, it has multiple Segment level lock.

So 2 Threads can execute put operation simultaneously by acquiring lock on different Segments.

Thread T1 calls concurrentHashMap.put(key, value), It acquires lock on say Segment 1 and invokes put method.

Thread T2 calls concurrentHashMap.put(key, value), It acquires lock on say Segment 4 and invokes put method.

Both threads doesn't interfere with each other and both can proceed simultaneously as they are working on separate Segment locks.

This is how ConcurrentHashMap improves Performance and provide Thread safety as well.

Same Segment/Different Segment : Yes.

Two threads T1 and T2 both can simultaneously read data from same Segment or different Segment of CHM simultaneously without blocking each other.

Write Operation: put(key, value)

Different Segment :Yes

Multiple threads can write data to different Segment of CHM simultaneously without blocking each other.

Same Segment : No

Multiple threads CANNOT write data to same Segment of CHM simultaneously and need to wait for one thread to come write operation and then only other write operation can be proceed.

Read-Write Operation: get and put

Say T1 is writing data in Segment 1 and T2 is reading data from same Segment 1, can read be allowed while writing operation is going on?

YES.

Both operation that is T1 writing and T2 reading can be done parallely.

What data will T2 read if T1 is updating same data?

Retrieval operations (including get) generally do not block, so may overlap with update operations (including put and remove).

Latest updated value present will be returned by get operation that is value updated by most recently completed update operationswill be returned.

Note: Get operations are lock free and can be performed simulateneously irrespective of other thread writing data on same or different Segment of CHM.

ConcurrentHashMap differes from Hashtable in terms of Performance by introducing Segment array.

Each index of Segment array is guarded by a lock for put operation.

Threads working on separate Segments index doesn't affect each other.

By default Segments array size is 16, So maximum 16 threads can simultaneously put data in map considering each thread is working on separate Segment array index.

Segment array size is configured using ConcurrencyLevel parameter as shown below,

It takes 3 parameters,

Example:

Load factor is 0.75, it means when average number of elements per map exceeds 150 (intital capacity * load factor = 200 * 0.75 = 150) at that time map size will be increased and existing items in map are rehashed to put in new larger size map.

For more details on Load Factor: Load factor in Map

Concurrency level is 10, it means at any given point of time Segment array size will be 10 or greater than 10, so that 10 threads can able to parallely write to a map.

Segment array size will decide how many parallel Threads will be able to execute put operation on map parallely.

So Segment array size should not be too big or should not be too small because,

Using a significantly higher value than we will waste space and time, and a significantly lower value can lead to thread competition.

If concurrenyLevel is 10 then Segment array size will be 16.

Segment array size = 2 to the power x, where result should be >= concurrenyLevel(in our case it is 10)

Segment array size = 2 to the power x >= 10

Segment array size = 2 ^ 1 = 2 >= 10 (False)

Segment array size = 2 ^ 2 = 4 >= 10 (False)

Segment array size = 2 ^ 3 = 8 >= 10 (False)

Segment array size = 2 ^ 4 = 16 >= 10 (True)

Segment array size is 16.

Example: 2

concurrenyLevel = 8 then Segment array size = ?

Find 2 ^ x >= 8

2 ^ 1 >= 2

2 ^ 2 >= 4

2 ^ 3 >= 8

Segment array size will be 8.

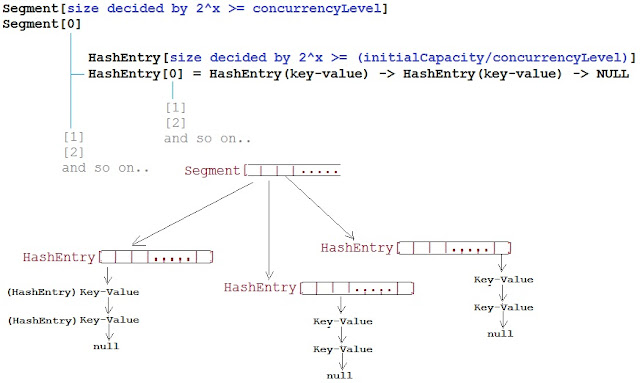

What is HashEntry[]?

We saw that each index of Segment array itself is complete HashMap,

In HashMap the bucket/array is of class Entry[] and in CHM the array is of class HashEntry[].

Lets see how internal architecture of CHM looks like,

How HashEntry[] array size will is calculated?

HashEntry[] array size = 2 ^ x >= (initialCapacity / concurrenyLevel)

Eg: ConcurrentHashMap(32, 0.75f, 4);

HashEntry[] array size = 2 ^ 1 = 2 >= 8(32/4) (False)

HashEntry[] array size = 2 ^ 2 = 4 >= 8 (False)

HashEntry[] array size = 2 ^ 3 = 8 >= 8 (True)

HashEntry[] array size = 8.

It means there will always be capacity of 8 key-value pairs that can be put in CHM after its creation.

Load Factor is a measure, which decides when exactly to increase the HashMap/CHM capacity(buckets) to maintain get and put operation complexity of O(1).

Default load factor of Hashmap/CHM is 0.75f (i.e 75% of current map size).

For more details on Load factor, Please refer: Load Factor in HashMap

Example: If say Thread 1 which is putting data in Segment[] array index 2 finds that HashEntry[] array needs to be rehashed due to exceed Load factor capacity then it will rehash HashEntry[] array present at Segment[] array index 2 only.

HashEntry[] array at other Segment indexes will still be intact, unaffected and continue to serve put and get request parallel.

Question 1. What is the need of ConcurrentHashMap when there is HashMap and Hashtable already present?

Performance and Thread safety are 2 parameter on which ConcurrentHashMap is focused.Imagine a scenario where we have frequent reads(get) and less writes(put) and need thread safety,

Can we use Hashtable in this scenario?

No. Hashtable is thread safe but give poor performance in case of multiple thread reading from hashtable because all methods of Hashtable including get() method is synchronized and due to which invokation to any method has to wait until any other thread working on hashtable complete its operation(get, put etc).

Can we use HashMap in this scenario?

No. Hashmap will solve performance issue by giving parallel access to multiple threads reading hashmap simultaneously.

But Hashmap is not thread safe, so what will happen if one thread tries to put data and requires Rehashing and at same time other thread tries to read data from hashmap, It will go in infinite loop.

Infinite loop problem discussed in detail: Infinite loop in HashMap

ConcurrentHashMap combines good features of hashmap and hashtable and solves performance and thread safety problem nicely.

Question 2. HashMap and Hashtable uses Array and Linkedlist as datastructure to store data, How is it different in ConcurrentHashMap?

If you are not familiar with HashMap and Hashtable, Please go through it first:How HashMap works

Below diagram shows how hashtable/hashmap look like,

|

| Hashmap internal data structure |

Architecture of ConcurrentHashMap looks like below,

|

| Internal structure of ConcurrentHashMap |

1. Putting key-value pair in ConcurrentHashMap requires first identifying exact index in

Segment array.

(Once Segment array index is identified, Now flow will be exactly same as putting the data in

hashmap/hashtable.)

2. After identifying index in Segment array, next task is to identify index of internal bucket/array

present in internal hashmap as shown in figure above.

3. After identying bucket(internal array index), iterate key-value pairs and check each key with key

to store, wherever match is found replace stored value with value to store.

If there is no match, store key-value pair at the last of list.

Getting key-value pair:

1. Getting key-value pair in ConcurrentHashMap requires first identifying exact index in

Segment array.

(Once Segment array index is identified, Now flow will be exactly same as getting the data from

hashmap/hashtable.)

2. After identifying index in Segment array, next task is to identify index of internal bucket/array

present in internal hashmap as shown in figure above.

3. After identying bucket(internal array index), iterate key-value pairs and match each key with

given key, wherever match is found return value stored against key.

If there is no match, return null.

Question 3. How ConcurrentHashMap is efficient in terms of Performance and Thread safety?

ConcurrentHashMap provides better Performance by replacing the Hashtable's map wide lock to Segment level lock.Hashtable is not efficient beacause it uses map wide lock, it means lock is applied on map object itself,

So if 2 threads tries to call hashtable.get(key),

Thread T1 calls to get() method will acquire a lock on hashtable object and then execute get() method. (Lock is on complete 'hashtable object')

Now if Thread T2 calls hashtable.get(key) method, then it will also try to acquire lock on hashtable object, but T2 will not able to acquire lock as lock on 'hashtable' is currently held by T1, So T2 waits until T1 finishes get() operation and release lock on hashtable object.

|

| Hashtable vs ConcurrentHashMap |

It means instead of single map wide lock, it has multiple Segment level lock.

So 2 Threads can execute put operation simultaneously by acquiring lock on different Segments.

Thread T1 calls concurrentHashMap.put(key, value), It acquires lock on say Segment 1 and invokes put method.

Thread T2 calls concurrentHashMap.put(key, value), It acquires lock on say Segment 4 and invokes put method.

Both threads doesn't interfere with each other and both can proceed simultaneously as they are working on separate Segment locks.

This is how ConcurrentHashMap improves Performance and provide Thread safety as well.

Question 4. Can multiple threads read and write from same or different Segments of ConcurrentHashMap simultaneously?

Read Operation: get(key)Same Segment/Different Segment : Yes.

Two threads T1 and T2 both can simultaneously read data from same Segment or different Segment of CHM simultaneously without blocking each other.

Write Operation: put(key, value)

Different Segment :Yes

Multiple threads can write data to different Segment of CHM simultaneously without blocking each other.

Same Segment : No

Multiple threads CANNOT write data to same Segment of CHM simultaneously and need to wait for one thread to come write operation and then only other write operation can be proceed.

Read-Write Operation: get and put

Say T1 is writing data in Segment 1 and T2 is reading data from same Segment 1, can read be allowed while writing operation is going on?

YES.

Both operation that is T1 writing and T2 reading can be done parallely.

What data will T2 read if T1 is updating same data?

Retrieval operations (including get) generally do not block, so may overlap with update operations (including put and remove).

Latest updated value present will be returned by get operation that is value updated by most recently completed update operationswill be returned.

Note: Get operations are lock free and can be performed simulateneously irrespective of other thread writing data on same or different Segment of CHM.

Question 5. What is the default size of Segment array? how it is tuned? What is ConcurrenyLevel in case of CHM?

Default size of Segment array is 16.ConcurrentHashMap differes from Hashtable in terms of Performance by introducing Segment array.

Each index of Segment array is guarded by a lock for put operation.

|

| Concurrency level in ConcurrentHashmap |

By default Segments array size is 16, So maximum 16 threads can simultaneously put data in map considering each thread is working on separate Segment array index.

How Segment array size is tuned?

Segment size decides the number of Threads that can paralley write to a map.Segment array size is configured using ConcurrencyLevel parameter as shown below,

ConcurrentHashMap m = new ConcurrentHashMap(initialCapacity, loadFactor, concurrencyLevel)

|

| Concurrenthashmap segment vs Hashmap bucket |

Example:

ConcurrentHashMap m = new ConcurrentHashMap(200 , 0.75f, 10);Initial capacity is 200, it means CHM make sure it has space for adding 200 key-value pairs after creation.

Load factor is 0.75, it means when average number of elements per map exceeds 150 (intital capacity * load factor = 200 * 0.75 = 150) at that time map size will be increased and existing items in map are rehashed to put in new larger size map.

For more details on Load Factor: Load factor in Map

Concurrency level is 10, it means at any given point of time Segment array size will be 10 or greater than 10, so that 10 threads can able to parallely write to a map.

Question 6. What will happen if the size of Segment array is too small or too large?

Choosing correct ConcurrencyLevel is very important because ConcurrencyLevel decides what will be the size of Segment array.Segment array size will decide how many parallel Threads will be able to execute put operation on map parallely.

|

| Concurrenthashmap should not be too low as well as not too high |

Using a significantly higher value than we will waste space and time, and a significantly lower value can lead to thread competition.

Question 6. If we choose ConcurrenyLevel as 10 then what will be size of Segment array? Is Segment array size exactly same as concurrenyLevel? If No, then how is the Segment array size calculated?

Segment array size is calculated based on concurrenyLevel specified but it doesn't mean it will be exactly same as concurrenyLevel.If concurrenyLevel is 10 then Segment array size will be 16.

Segment array size = 2 to the power x, where result should be >= concurrenyLevel(in our case it is 10)

Segment array size = 2 to the power x >= 10

Segment array size = 2 ^ 1 = 2 >= 10 (False)

Segment array size = 2 ^ 2 = 4 >= 10 (False)

Segment array size = 2 ^ 3 = 8 >= 10 (False)

Segment array size = 2 ^ 4 = 16 >= 10 (True)

Segment array size is 16.

Example: 2

concurrenyLevel = 8 then Segment array size = ?

Find 2 ^ x >= 8

2 ^ 1 >= 2

2 ^ 2 >= 4

2 ^ 3 >= 8

Segment array size will be 8.

Question 7. What is HashEntry array and how is the size of HashEntry decided?

Default initial capacity of CHM is 16. It means CHM make sure there is sufficient space to accomodate 16 key-value pairs after CHM is created.What is HashEntry[]?

We saw that each index of Segment array itself is complete HashMap,

|

| Hashentry array in Concurrenthashmap |

static final class Segment<K,V> extends ReentrantLock implements Serializable {

transient volatile HashEntry<K,V>[] table;

}

static final class HashEntry<K,V> {

final int hash;

final K key;

volatile V value;

volatile HashEntry<K,V> next;

}

Lets see how internal architecture of CHM looks like,

|

| How concurrenthashmap works internally in java |

ConcurrentHashMap m = new ConcurrentHashMap(initialCapacity, loadFactor, concurrencyLevel)

HashEntry[] array size = 2 ^ x >= (initialCapacity / concurrenyLevel)

Eg: ConcurrentHashMap(32, 0.75f, 4);

HashEntry[] array size = 2 ^ 1 = 2 >= 8(32/4) (False)

HashEntry[] array size = 2 ^ 2 = 4 >= 8 (False)

HashEntry[] array size = 2 ^ 3 = 8 >= 8 (True)

HashEntry[] array size = 8.

It means there will always be capacity of 8 key-value pairs that can be put in CHM after its creation.

Question 8. How does ConcurrentHashMap handle rehashing while another thread is still writing on another segment/partition?

For understanding rehashing, please understand Load Factor first.Load Factor is a measure, which decides when exactly to increase the HashMap/CHM capacity(buckets) to maintain get and put operation complexity of O(1).

Default load factor of Hashmap/CHM is 0.75f (i.e 75% of current map size).

For more details on Load factor, Please refer: Load Factor in HashMap

|

| How rehashing works in Concurrenthashmap |

In CHM, Every segment is separately rehashed so there is no collision between Thread 1 writing to Segment index 2 and Thread 2 writing to Segment index 5.

Example: If say Thread 1 which is putting data in Segment[] array index 2 finds that HashEntry[] array needs to be rehashed due to exceed Load factor capacity then it will rehash HashEntry[] array present at Segment[] array index 2 only.

HashEntry[] array at other Segment indexes will still be intact, unaffected and continue to serve put and get request parallel.

Exception Handling Interview Question-Answer

Method Overloading - Method Hiding Interview Question-Answer

Advanced Multithreading Interview Questions-Answers In Java

Type Casting Interview Questions-Answers In Java

How Thread.join() in Java works internally

How is ambiguous overloaded method call resolved in java

Enjoy !!!!

If you find any issue in post or face any error while implementing, Please comment.